Databricks & Snowflake Summits 2025 aftermath - Part 1: Catalog Wars

The Data Catalog Wars Heat Up: What We Learned from Conference Season

Now that Databricks and Snowflake summits are behind us, it's time to look at where the data world is headed. Both conferences spent serious keynote time on data catalogs - and for good reason. This kicks off our series analyzing the most interesting announcements from conference season.

The Catalog Wars

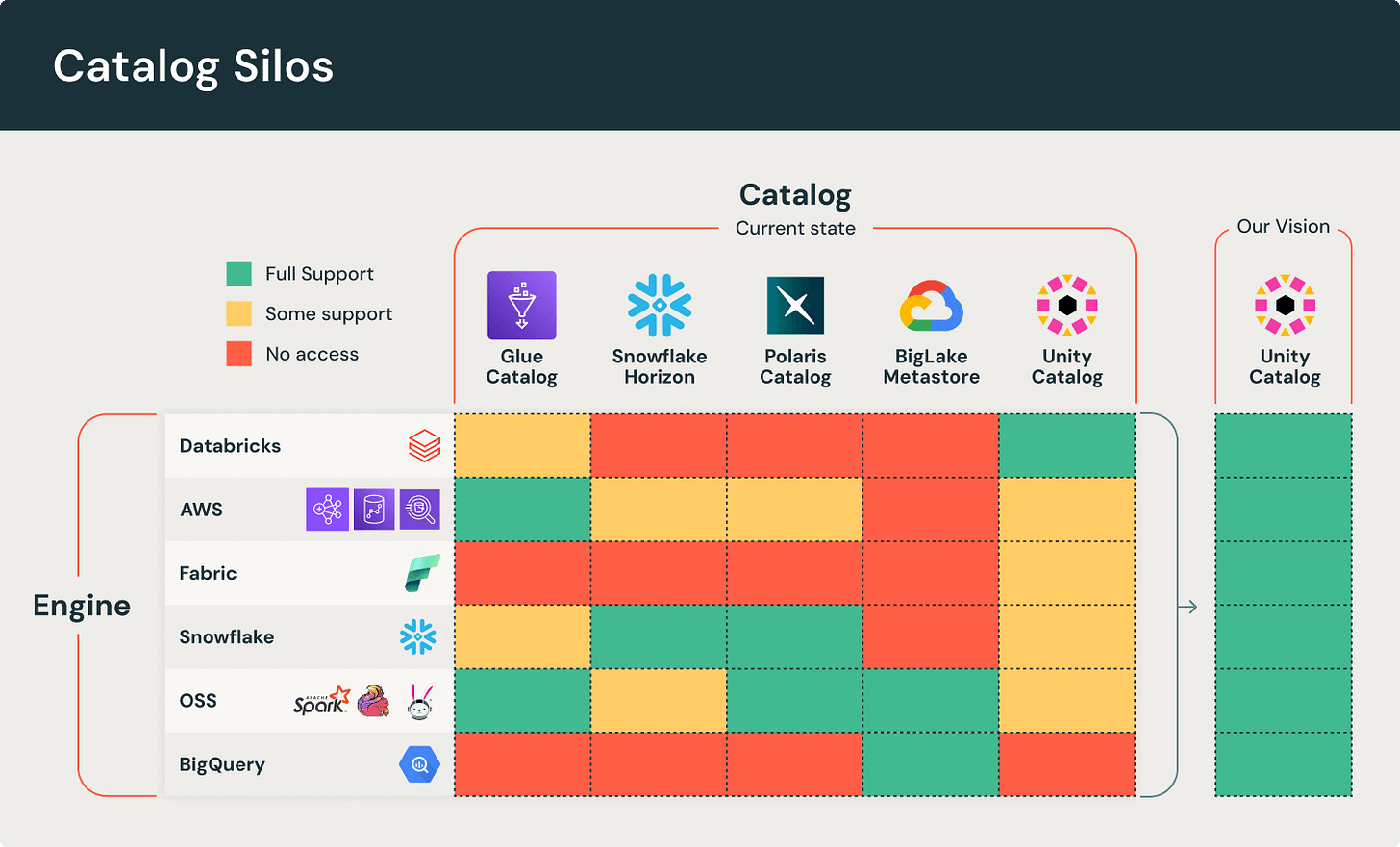

During his Day 2 keynote, Matei Zaharia, co-founder and CTO of Databricks, showed a slide about the "crowded data catalog market". His message was clear: too many vendors are creating walled gardens where you need their compute even to access your own data.

He then mostly targeted Snowflake, which offers both Apache Polaris and a closed, walled-garden catalog, framing the contest as a race between platforms. But at this point, there is no clear winner in the data catalog wars. Here are the three scenarios:

Scenario 1 - Platform Wins: Unity / Polaris

Unity Catalog brings a lot to the table - it now "fully supports" Apache Iceberg AND Delta Lake. With over 10,000 enterprises already using it, Databricks has built impressive governance features like attribute-based access control and automated lineage tracking. The big differentiator? It manages AI assets too - ML models, functions, the works.

Apache Polaris takes a different path. It's all about being vendor-neutral and Iceberg-native. If you're running a multi-engine environment with Spark, Flink, and Trino, Polaris gives you that flexibility without forcing you into one vendor's ecosystem.

The main advantage of using a platform's catalog is the deep integration with that specific platform - permissions, lineage, performance and more. Both catalogs are also backed by giants.
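To make the multi-engine point about Polaris a bit more concrete, here is a minimal sketch of how a Spark session is typically pointed at an Iceberg REST catalog, which is the interface Polaris exposes. The property keys are the standard Iceberg Spark catalog settings; the helper name `iceberg_rest_spark_conf`, the catalog name, the URI, and the warehouse name are all placeholders assumed for illustration, and authentication settings are left out entirely.

```python
def iceberg_rest_spark_conf(catalog_name: str, uri: str, warehouse: str) -> dict[str, str]:
    """Build Spark properties that register an Iceberg REST catalog.

    Hypothetical helper for illustration only. The keys follow Iceberg's
    Spark catalog configuration; credentials are deliberately omitted.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        prefix: "org.apache.iceberg.spark.SparkCatalog",  # Iceberg's Spark catalog class
        f"{prefix}.type": "rest",                          # talk to the catalog over the REST protocol
        f"{prefix}.uri": uri,                              # catalog endpoint (placeholder value below)
        f"{prefix}.warehouse": warehouse,                  # warehouse / catalog name on the server
    }


# Placeholder values, assumed for this example only.
conf = iceberg_rest_spark_conf(
    catalog_name="polaris",
    uri="http://localhost:8181/api/catalog",
    warehouse="demo_warehouse",
)

for key, value in conf.items():
    print(f"{key}={value}")
```

Because the catalog is addressed through an open REST protocol rather than a vendor SDK, other engines such as Flink or Trino can be pointed at the same endpoint through their own Iceberg connector settings.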

Scenario 2 - Storage Vendor Wins: S3 Tables

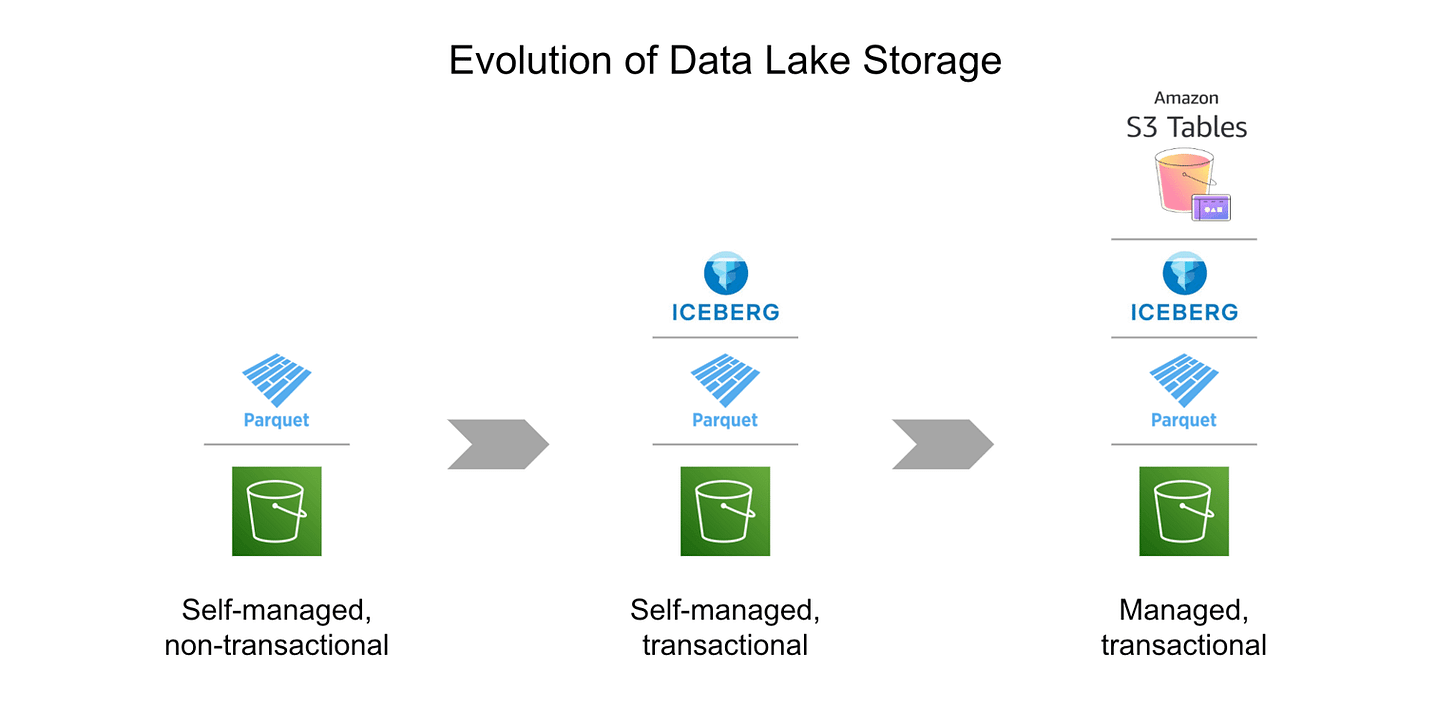

Amazon's S3 Tables launched with big promises - the first object store with built-in Iceberg support. Six months later? The results are a mixed bag.

The good: seamless integration with compute performance, permissions and retention - something almost impossible to do without being the compute layer. The not-so-good: it's very AWS-centric and not open source. While it supports the Iceberg format, it does not support Delta Lake, and the vendor lock-in concerns are real. Plus, its compaction fees are pretty expensive compared to the competition.

Scenario 3 - Open Source Community Wins

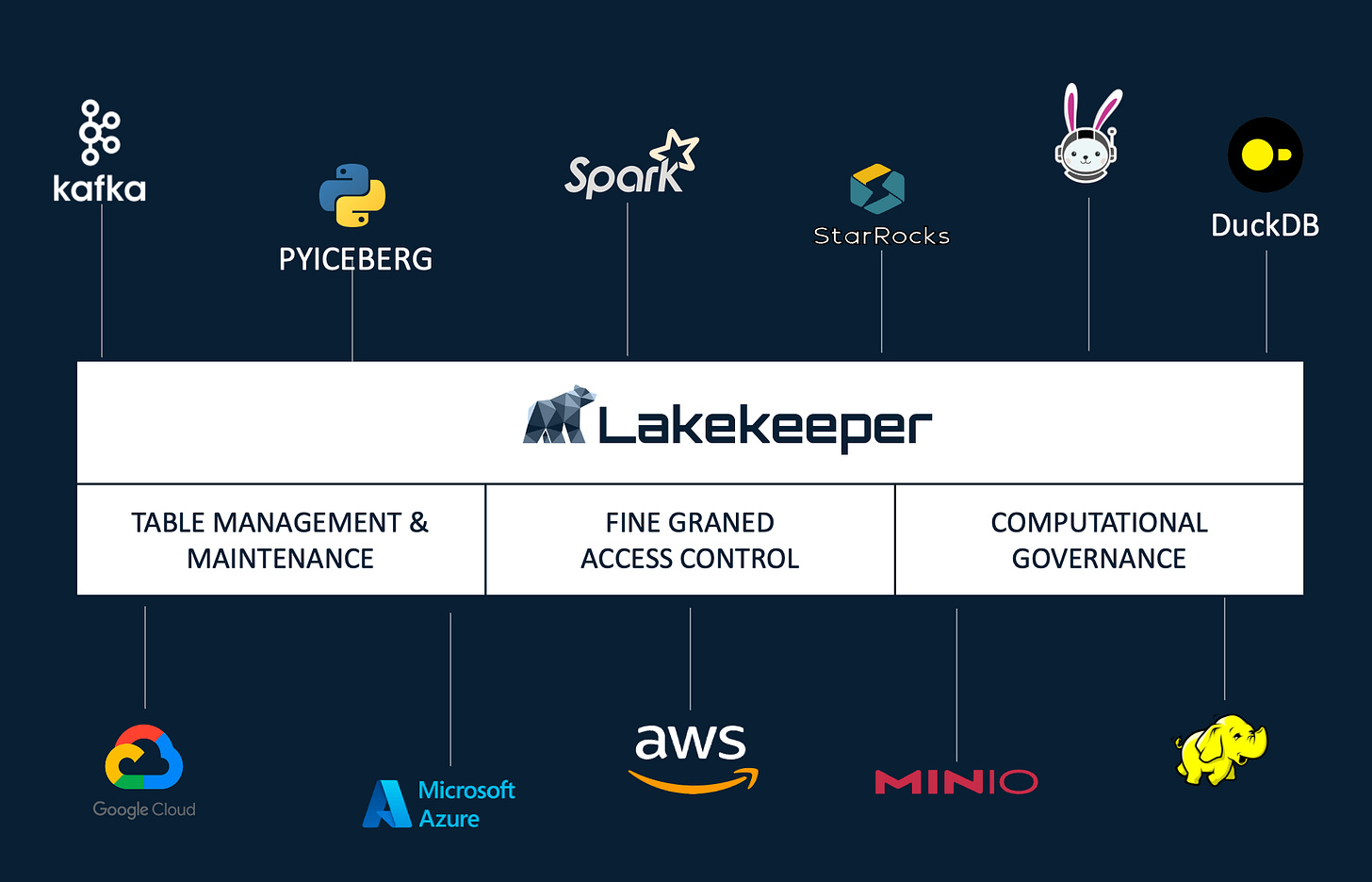

The open-source world isn't sitting still. Projects such as Lakekeeper, built in Rust, focus on performance and security with fine-grained access control. Project Nessie pioneered "Git for data" with branching and version control for your entire catalog.

Given how often open-source communities have won out over much bigger enterprises in the history of big data, I would not underestimate this scenario.

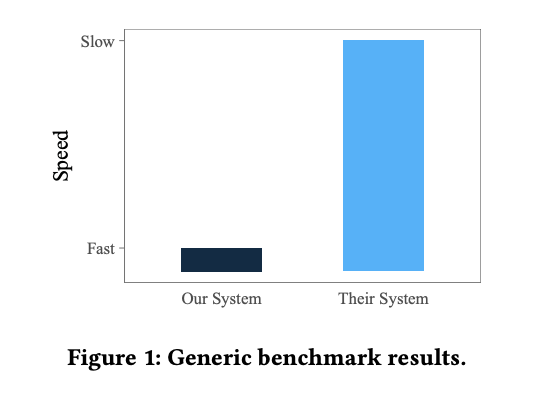

The Benchmark Problem

Here's the frustrating part: we can't reliably compare catalog performance. Unlike databases, which have the TPC-H and TPC-DS benchmarks, catalogs lack standard benchmarks. Vendor benchmarks? "Complete fabrications, never to be trusted."

[Figure from the paper "Fair Benchmarking Considered Difficult: Common Pitfalls In Database Performance Testing"]

Does the "complete support for Apache Iceberg" that Databricks announced actually improve performance? Who knows.

The Bottom Line

Six months ago, everyone thought S3 Tables would disrupt everything. Today, the journey to a clear catalog leader continues. But here's what's exciting: everyone is going open source. Unity, Polaris, and the community projects are all embracing openness.

This isn't just vendor posturing - it's a fundamental shift. When platforms open-source their core governance technology, it creates real opportunities for innovation and reduces lock-in risks.

The catalog wars aren't ending; they're evolving into a competition for the best open implementation. And that's good news for all of us building modern data platforms.

What’s Next

In Part 2, we will discuss Databricks' Lakebase announcement and the real-time layer of the modern data stack.